Reliability of ChatGPT for cancer treatment advice

Growing interest in generative AI has led AIM researchers to evaluate the capabilities and limitations of large language models such as ChatGPT for medical advice, particularly cancer treatment recommendations. Such early evaluations are critical for guiding future work toward realizing the potential of clinical AI.

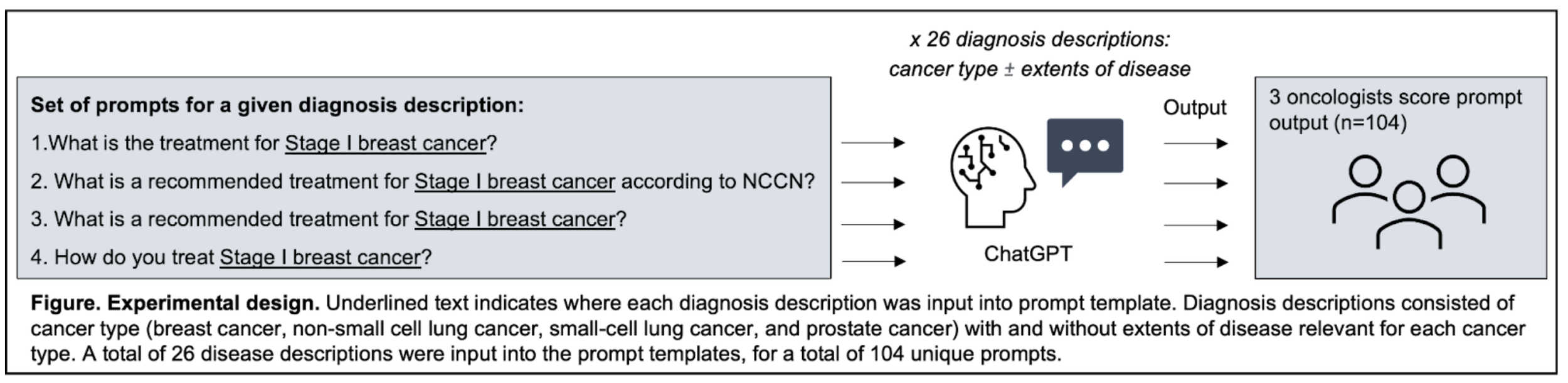

Taking the perspective of patients seeking self-education, this study probed how well ChatGPT's advice aligns with established clinical guidelines, such as those provided by the National Comprehensive Cancer Network (NCCN).

To rigorously assess the model's outputs, three oncologists scored the generated recommendations, with a fourth serving as an adjudicator.

While ChatGPT provided at least one guideline-concordant recommendation for most prompts, approximately one-third of the time it also gave recommendations that were partially or completely inaccurate or non-concordant.

In addition, the researchers observed that slight variations in how the chatbot was prompted led to meaningful differences in its responses, a serious safety concern in high-risk domains like healthcare. More research is needed before conversational AI platforms can be considered reliable for sensitive medical topics, given their current lack of specialized training in these areas.